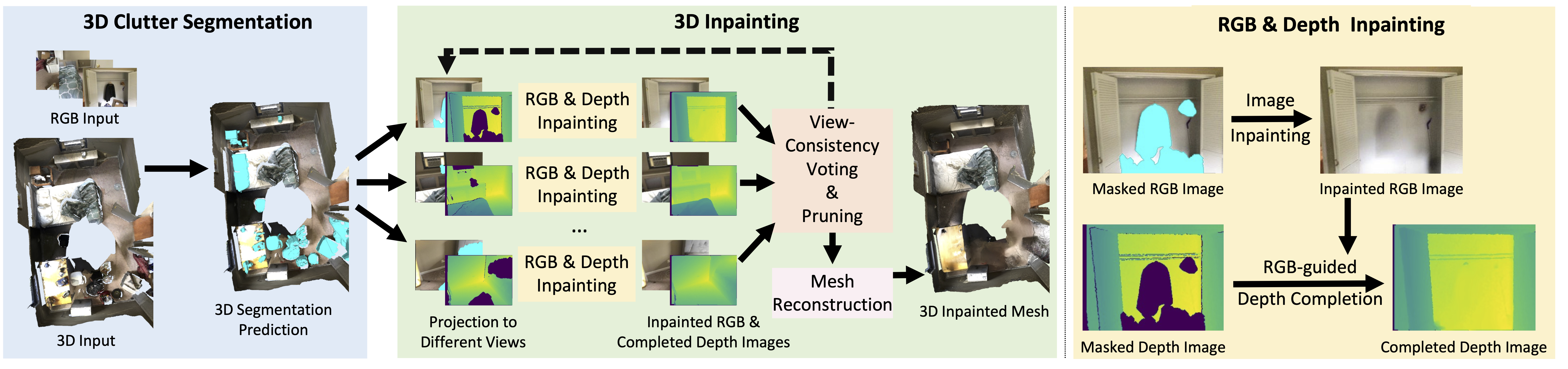

Method Overview

Removing clutter from scenes is essential in many applications, ranging from privacy-concerned content filtering to data augmentation. In this work, we present an automatic system that removes clutter from 3D scenes and inpaints the resulting holes with coherent geometry and texture.

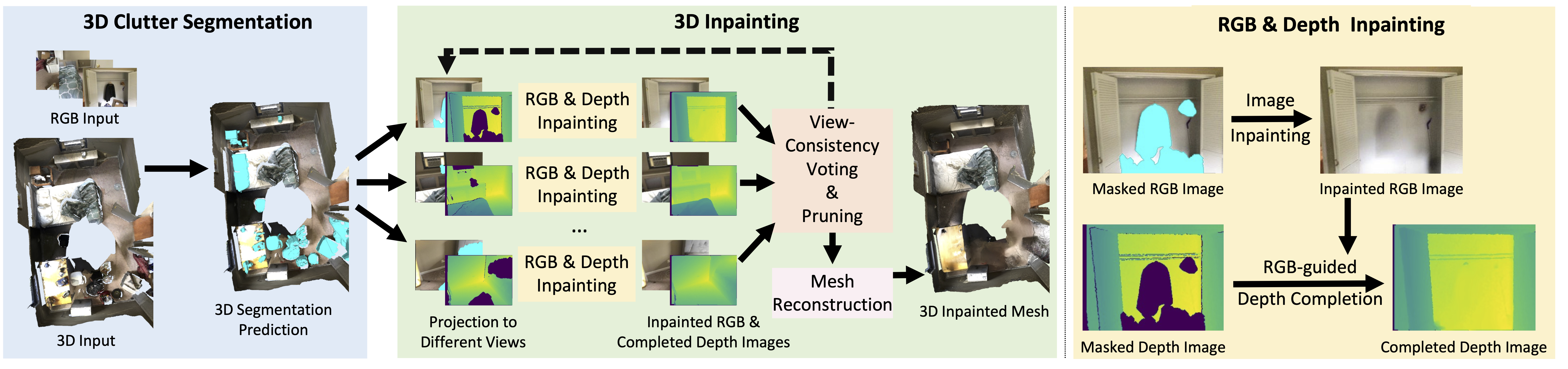

We propose techniques for its two key components: 3D segmentation from shared properties and 3D inpainting, both of which are important problems. The definition of 3D scene clutter (frequently-moving objects) is not well captured by commonly-studied object categories in computer vision. To tackle the lack of well-defined clutter annotations, we group noisy fine-grained labels, leverage virtual rendering, and impose an instance-level area-sensitive loss. Once clutter is removed, we inpaint geometry and texture in the resulting holes by merging inpainted RGB-D images. This requires novel voting and pruning strategies that guarantee multi-view consistency across individually inpainted images for mesh reconstruction.
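To make the idea of an instance-level, area-sensitive loss more concrete, here is a minimal sketch of one plausible reading: a binary cross-entropy that is averaged within each instance first and then across instances, so a small cluttered object is not drowned out by a large one. This is an illustrative assumption, not the formulation used in the paper; the function name `area_normalized_bce` and its arguments are hypothetical.

```python
import math
from collections import defaultdict


def area_normalized_bce(probs, labels, instance_ids, eps=1e-7):
    """Binary cross-entropy averaged per instance, then across instances.

    Hypothetical sketch (not the paper's loss):
      probs        -- predicted clutter probability for each pixel
      labels       -- ground-truth label for each pixel (1 = clutter, 0 = not)
      instance_ids -- id of the instance each pixel belongs to
    """
    if not (len(probs) == len(labels) == len(instance_ids)) or not probs:
        raise ValueError("inputs must be non-empty and of equal length")

    per_instance = defaultdict(list)
    for p, y, inst in zip(probs, labels, instance_ids):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        loss = -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
        per_instance[inst].append(loss)

    # Each instance contributes one mean value, regardless of its pixel area.
    instance_means = [sum(v) / len(v) for v in per_instance.values()]
    return sum(instance_means) / len(instance_means)
```

For example, with a well-predicted 8-pixel instance and a poorly predicted 2-pixel instance, a plain per-pixel average is dominated by the large instance, whereas this variant weights both instances equally and therefore penalizes the missed small object more strongly.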

Experiments on the ScanNet and Matterport3D datasets show that our method outperforms baselines for clutter segmentation and 3D inpainting, both visually and quantitatively.

@inproceedings{Wei:2023:CDA,

author = "Fangyin Wei and Thomas Funkhouser and Szymon Rusinkiewicz",

title = "Clutter Detection and Removal in {3D} Scenes with View-Consistent Inpainting",

booktitle = "International Conference on Computer Vision (ICCV)",

year = "2023",

month = oct

}